While profiling a data import feature, I was able to significantly speed up a function that is called a very large number of times.

The PHP application in this case uses double-writing because it is being progressively migrated away from a legacy system.

The data to import comes from a file and, for each line, the processing creates entities that must be passed on to the legacy system via double-write.

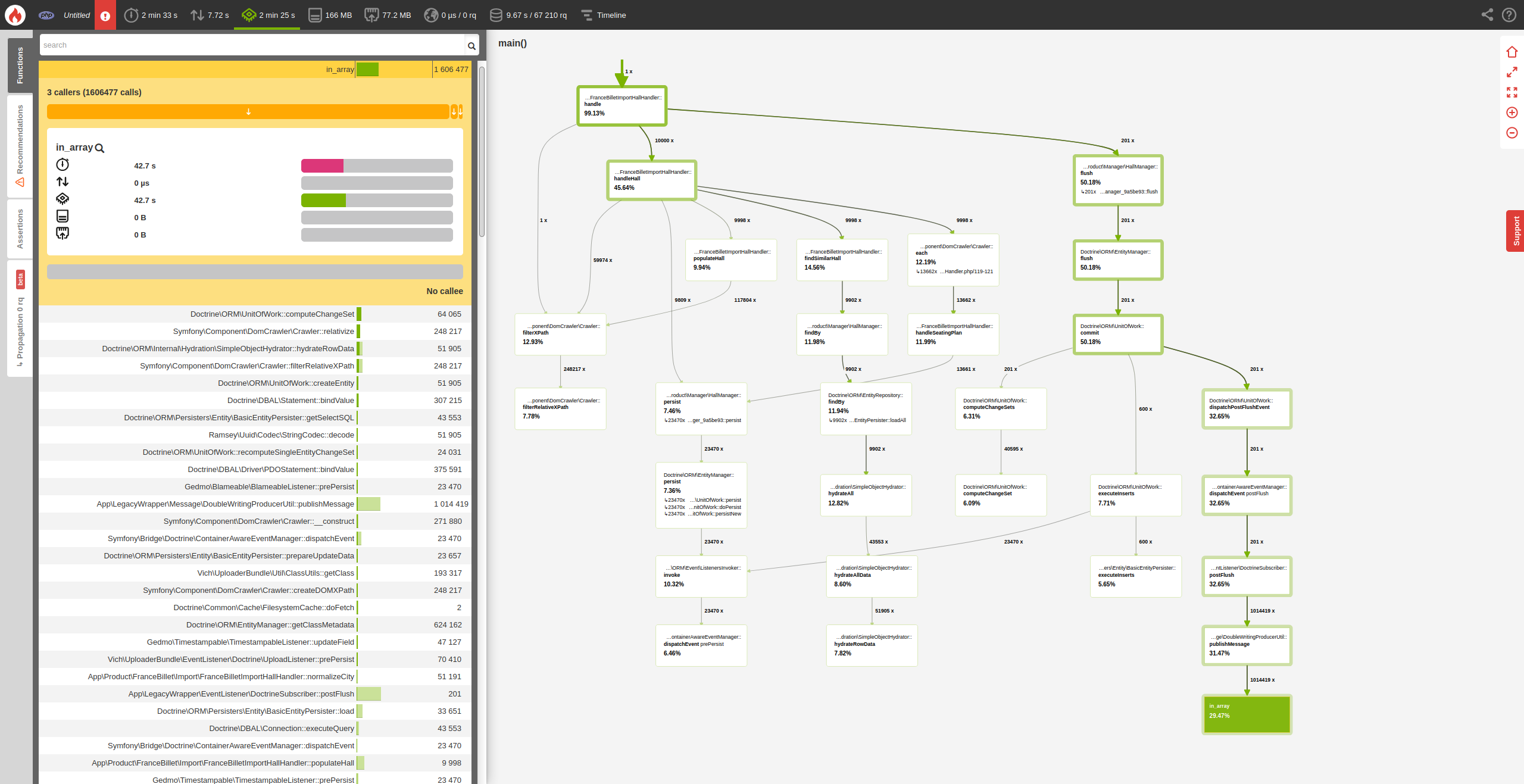

Here is a Blackfire profile when I limit to 10k lines:

We can see that the in_array() function is called more than a million times in a double-write service and takes ~29% of the CPU time alone.

If we take a look at the calling code:

```php
public function publishMessage(?string $message, $queue = SyncLegacyDBConsumer::SYNC_QUEUE): void
{
    // Linear scan of every message published so far.
    if (null !== $message
        && !\in_array($message, $this->publishedMessages, true)
    ) {
        $this->serviceLocator->get(sprintf('old_sound_rabbit_mq.%s_producer', $queue))->publish($message);
        $this->publishedMessages[] = $message;
    }
}
```

We see that $this->publishedMessages is scanned each time a new message is published, in order to avoid duplicates. As the array grows, each scan takes longer: every lookup is O(n) in the number of messages already published.

The idea is to turn the list into a dictionary (an associative array) that uses a unique key for each message: the message itself.

We can then check directly whether a message has already been published with isset(), which is very fast in PHP. Each lookup is now O(1) on average.

The code becomes as follows:

```php
public function publishMessage(?string $message, $queue = SyncLegacyDBConsumer::SYNC_QUEUE): void
{
    // Hash lookup on the message itself instead of a linear scan.
    if (null !== $message
        && !isset($this->publishedMessages[$message])
    ) {
        $this->serviceLocator->get(sprintf('old_sound_rabbit_mq.%s_producer', $queue))->publish($message);
        $this->publishedMessages[$message] = true;
    }
}
```
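One detail worth keeping in mind when a message becomes an array key: PHP casts integer-like string keys (such as '42') to integers. The same cast is applied on lookup, so the isset() check still works, but the keys are no longer guaranteed to be strings if they are read back. The short sketch below illustrates this with made-up payloads; the variable name and messages are hypothetical and not taken from the application.

```php
<?php
// Hypothetical payloads, used only to illustrate PHP's array key casting.
$published = [];
$published['42'] = true;        // integer-like string: stored under the int key 42
$published['{"id":42}'] = true; // ordinary string: stored unchanged

// The lookup applies the same cast, so the duplicate check still succeeds.
var_dump(isset($published['42']));

// Reading the keys back shows the first one is now an integer.
var_dump(array_keys($published) === [42, '{"id":42}']);
```

For serialized messages this should rarely matter, since the set is only used for membership checks.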

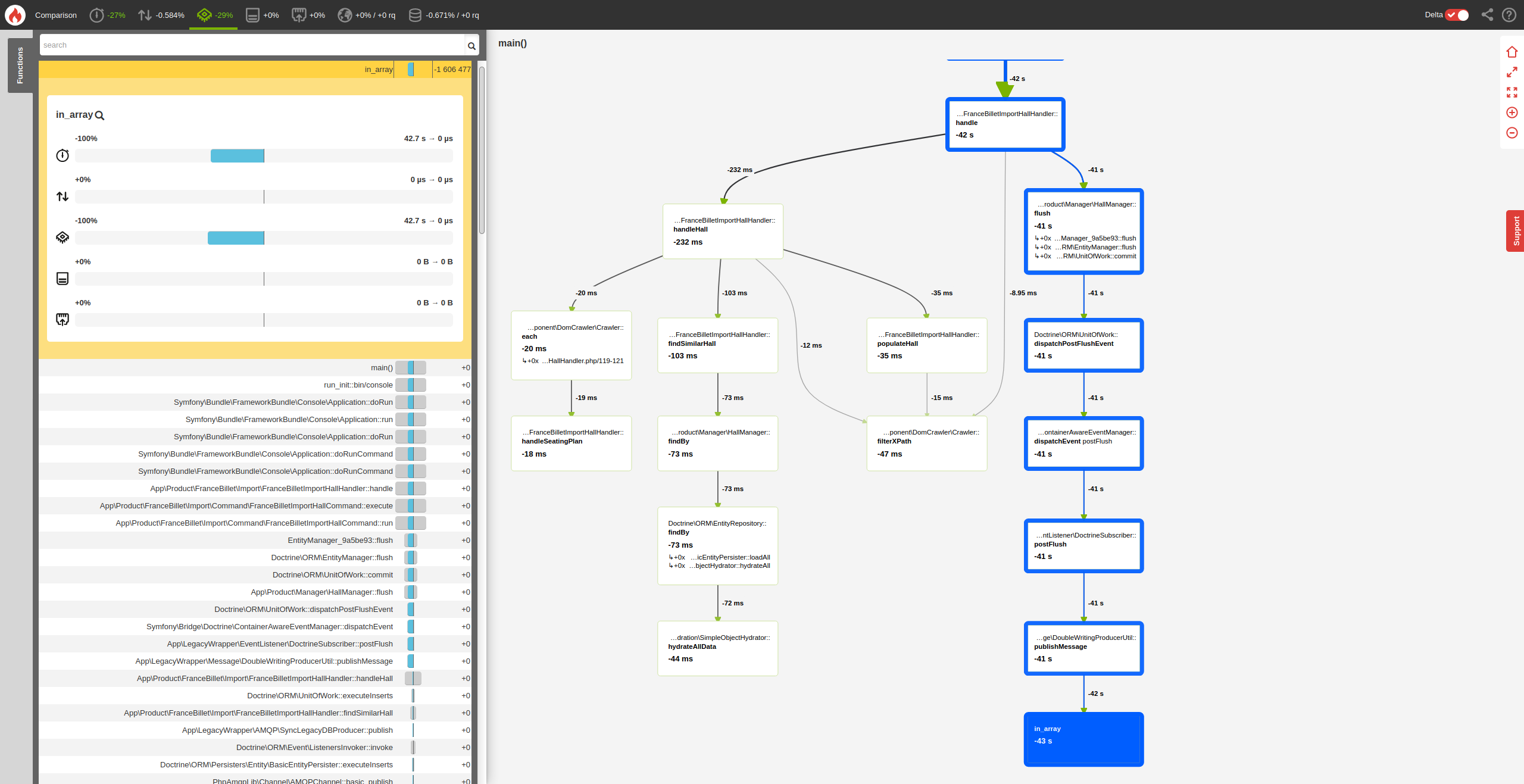

If we run a Blackfire profile again, it gives:

In this example, we saved ~29% of CPU time.
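The difference between the two lookups can also be reproduced outside the application with a small standalone script. This is only a rough sketch: the function names, the message format, and the volumes are assumptions chosen for illustration, and the timings will vary by machine and PHP version.

```php
<?php
// Hypothetical micro-benchmark; names and message format are illustrative only.

/** Deduplicate with a linear scan: each check walks the whole list. */
function dedupeWithInArray(array $messages): array
{
    $seen = [];
    foreach ($messages as $message) {
        if (!\in_array($message, $seen, true)) {
            $seen[] = $message;
        }
    }
    return $seen;
}

/** Deduplicate with a keyed lookup: each check is a single hash lookup. */
function dedupeWithIsset(array $messages): array
{
    $seen = [];
    foreach ($messages as $message) {
        if (!isset($seen[$message])) {
            $seen[$message] = true;
        }
    }
    // The keys are the messages. Cast back to string because PHP stores
    // integer-like string keys such as "42" as integers.
    return array_map('strval', array_keys($seen));
}

// Build 10,000 messages, half of which are duplicates.
$messages = [];
for ($i = 0; $i < 10000; $i++) {
    $messages[] = sprintf('{"entity":"product","id":%d}', $i % 5000);
}

$start = hrtime(true);
$a = dedupeWithInArray($messages);
$inArrayMs = (hrtime(true) - $start) / 1e6;

$start = hrtime(true);
$b = dedupeWithIsset($messages);
$issetMs = (hrtime(true) - $start) / 1e6;

printf(
    "in_array: %.1f ms, isset: %.1f ms, same result: %s\n",
    $inArrayMs,
    $issetMs,
    $a === $b ? 'yes' : 'no'
);
```

Both functions return the same list of unique messages in order of first appearance; only the cost of the membership check differs.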

Thomas Talbot
